Let's take the example of an animal recognition and classification system. We begin with a simple Cat-Dog Classifier, which will be version 1 of our model. Let's say we have trained a copy of the model to recognize Koalas as well, so version 2 is a Cat-Dog-Koala Classifier. How should we approach deploying the newer version of our model?

Model Deployment Strategies

Big Bang — Recreate

WHAT — This is a "from scratch" style of deployment: you have to tear down the existing deployment before the new one can be deployed.

WHERE — Generally acceptable in development environments, where you can recreate the deployments as many times as you wish with different configurations. Usually, a deployment pipeline tears down the existing resources and creates the updated ones in their place.

There is a certain amount of downtime involved with this kind of deployment, which is usually not acceptable given the current pace of ML development in organizations.

In our example, we would replace version 1 with version 2; this includes replacing all related infrastructure and library setups.
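To make the downtime concrete, here is a toy Python simulation of a recreate rollout. It assumes instances can be modeled as plain strings naming the model version they serve; the `recreate` function and the four-replica setup are hypothetical, and nothing here talks to a real cluster or deployment tool.

```python
# Toy simulation of a "recreate" (Big Bang) rollout. Hypothetical: instances
# are plain strings naming the model version they serve.

def recreate(current, new_version, replicas):
    """Tear down every running instance, then start `replicas` new ones.

    Returns a snapshot of the running instances after each step, so the
    downtime window (a snapshot with no instances) is visible.
    """
    running = list(current)
    timeline = [list(running)]
    # Phase 1: tear down the existing deployment completely.
    while running:
        running.pop()
        timeline.append(list(running))
    # Phase 2: bring up the new version from scratch.
    for _ in range(replicas):
        running.append(new_version)
        timeline.append(list(running))
    return timeline


timeline = recreate(["v1"] * 4, "v2", replicas=4)
for step, instances in enumerate(timeline):
    status = "DOWN" if not instances else "serving"
    print(step, status, instances)
```

The snapshot with no running instances is the downtime window; in a real cluster it lasts as long as the new version's instances take to start and become ready.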

Rolling Updates

They see me rolling…They hating…🎵

WHAT — Rolling updates replace every instance of your model/application one by one. Say you have 4 pods of the application running and you trigger a rolling update with a new version of your model: the older pods are replaced with newer ones, one at a time. Because the remaining pods keep serving traffic during each swap, there is zero downtime in this approach.

WHERE — Useful when you want to quickly update every instance of your model to a new version. It also allows you to roll back to an older version when needed. Mostly used in testing or staging environments, where teams need to try out new versions of their models.

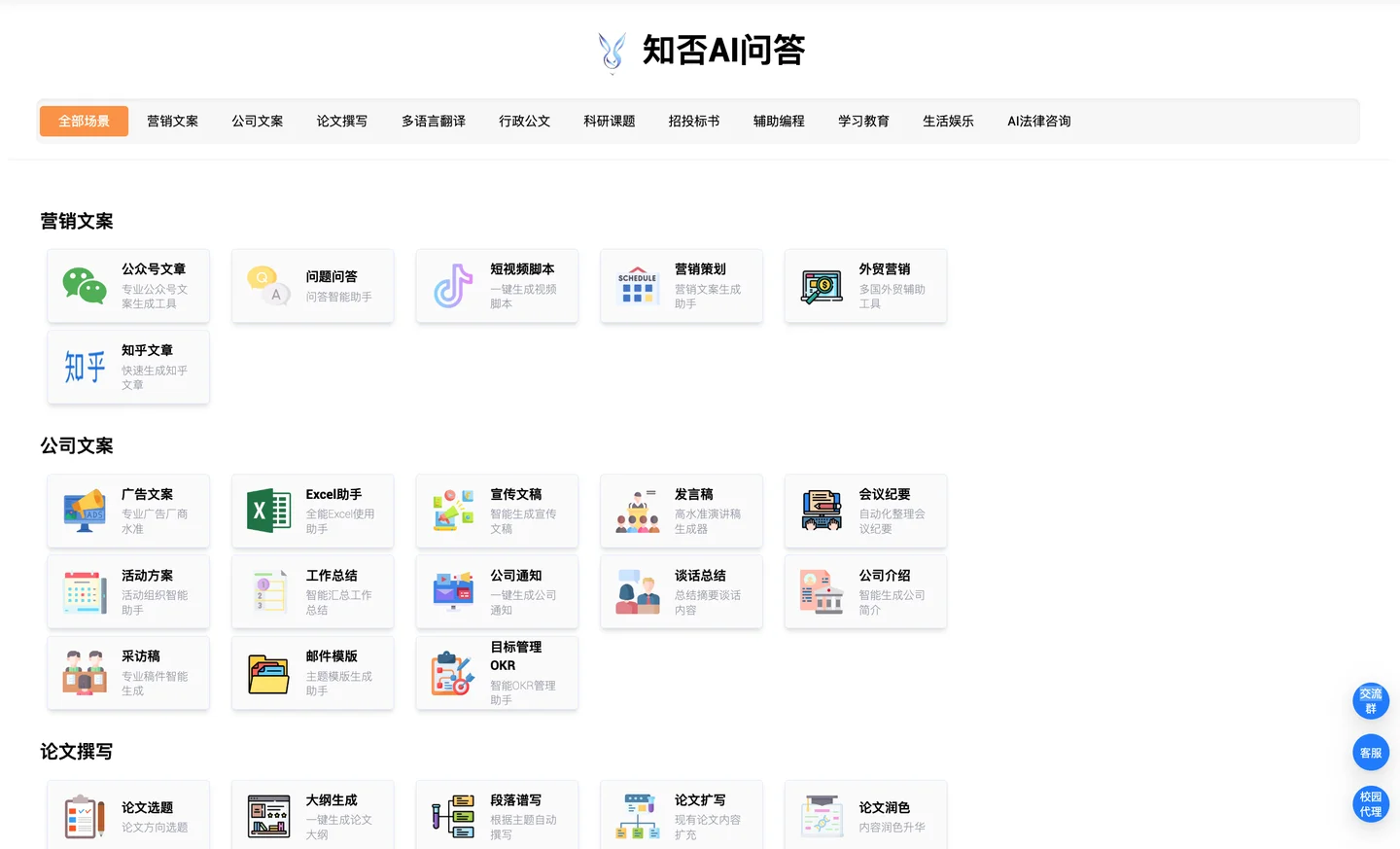
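A rolling update can be sketched the same way. This toy Python simulation assumes the orchestrator may briefly run one extra instance (a surge of one) while swapping versions; real orchestrators make this configurable (Kubernetes, for example, through `maxSurge` and `maxUnavailable`), and the `rolling_update` function below is hypothetical.

```python
# Toy simulation of a rolling update (hypothetical; no real orchestrator).
# Instances are strings naming the model version they serve.

def rolling_update(current, new_version):
    """Replace old instances one at a time, recording each intermediate state.

    A new-version instance is started before an old one is retired, so the
    number of serving instances never drops below the original count.
    """
    running = list(current)
    timeline = [list(running)]
    old_count = sum(1 for v in current if v != new_version)
    for _ in range(old_count):
        running.append(new_version)  # bring up one new instance first
        timeline.append(list(running))
        # retire the first remaining instance that is not on the new version
        running.remove(next(v for v in running if v != new_version))
        timeline.append(list(running))
    return timeline


rollout = rolling_update(["v1"] * 4, "v2")
print(rollout[-1])  # ['v2', 'v2', 'v2', 'v2']

# Rolling back is just another rolling update towards the old version.
rollback = rolling_update(rollout[-1], "v1")
print(rollback[-1])  # ['v1', 'v1', 'v1', 'v1']
```

Because a new instance is always up before an old one is removed, capacity never drops below four, which is where the zero-downtime property comes from. With a surge of zero, capacity would dip by one instance during each swap instead.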

Canary Deployment

Canary Deployment is usually performed in staging and production when teams need to understand how the newly updated models perform. It can be done in two ways:

- Canary Rolling Deployment: a small subset of the existing instances is updated in place to the new version.
- Canary Parallel Deployment: a smaller set of new instances is created alongside the existing setup, and a percentage of user requests is sent to these pods.

Canary Deployment (Image by Author)

Depending on the traffic load and infrastructure availability, you can choose which Canary implementation to set up. User requests are usually tagged via headers, and the load balancer is configured to send them to the appropriate destination. This means a set of users is chosen to view the updates, and the same set of users sees the updates every time; user requests aren't randomly sent to the new pods. In other words, Canary Deployments have session affinity.

In our example, let's say 10% of selected users can submit their images to the model and have them classified with the Koala option, while the rest of the users can only use the binary classifier.
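Session affinity is what separates this from a random split. Below is a minimal Python sketch of the routing decision, assuming each request carries a user ID in a header; the header name `X-User-Id`, the 10% threshold, and the version labels are hypothetical. Hashing the ID gives every user a stable bucket, so the same users land on version 2 on every request. In practice this rule would usually live in the load balancer or service-mesh configuration rather than in application code.

```python
import hashlib

CANARY_PERCENT = 10  # hypothetical share of users pinned to version 2
STABLE = "v1-cat-dog"
CANARY = "v2-cat-dog-koala"


def canary_bucket(user_id: str) -> int:
    """Map a user ID to a stable bucket in [0, 100) using SHA-256."""
    digest = hashlib.sha256(user_id.encode("utf-8")).hexdigest()
    return int(digest, 16) % 100


def route(headers: dict) -> str:
    """Choose a model version from request headers (header name is hypothetical)."""
    user_id = headers.get("X-User-Id", "")
    return CANARY if canary_bucket(user_id) < CANARY_PERCENT else STABLE


# The same user always gets the same version: session affinity.
print(route({"X-User-Id": "user-42"}) == route({"X-User-Id": "user-42"}))  # True

# Roughly 10% of distinct users end up on the canary.
users = [f"user-{i}" for i in range(10_000)]
share = sum(route({"X-User-Id": u}) == CANARY for u in users) / len(users)
print(f"canary share: {share:.1%}")
```

SHA-256 is used instead of Python's built-in `hash()` because string hashing is randomized per process, which would break affinity across restarts or replicas. This sketch corresponds to the parallel variant, where the two versions run as separate pools behind the router.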

A/B Testing

WHAT — This methodology is most commonly used in User Experience research to evaluate what users prefer. In the ML scenario, we can use this style of deployment to understand what users prefer and which model might work better for them.

WHERE — Mostly employed in deploying recommendation systems worldwide. Depending on demographics, an online marketplace might employ two different recommendation engines: one serves the general set of users, and the other serves a specific geographic locality with more native-language support. After a period of time, the engineers can determine which engine gives users a smoother experience.

Why we need A/B Testing (Image by Author)

In our example, say we deploy the Cat-Dog classifier globally, but deploy both versions 1 and 2 in the Australia and Pacific Islands region, where a random share of user requests is sent to version 2. Now imagine retraining version 2 to recognize more local animal breeds and deploying it: which version do you think people in Australia will prefer?
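For contrast, an A/B split assigns each request at random, with no session affinity. The sketch below is a hypothetical Python illustration: it assumes a 50/50 split and a simple "user liked the result" feedback signal, neither of which comes from the setup described above. A real A/B test would also check that any difference between versions is statistically significant before declaring a winner.

```python
import random
from collections import Counter


def ab_route(rng: random.Random, split: float = 0.5) -> str:
    """Send a request to version 2 with probability `split`, else version 1."""
    return "v2" if rng.random() < split else "v1"


def preference_rates(events):
    """Compute the share of positive feedback per version.

    `events` is an iterable of (version, liked) pairs, where `liked` is a
    hypothetical boolean feedback signal collected from users.
    """
    shown, liked = Counter(), Counter()
    for version, was_liked in events:
        shown[version] += 1
        liked[version] += int(was_liked)
    return {version: liked[version] / shown[version] for version in shown}


rng = random.Random(42)  # seeded only so the sketch is reproducible
assignments = Counter(ab_route(rng) for _ in range(1_000))
print(assignments)  # roughly 500 requests per version

# Feedback would come from real users; these three events are placeholders.
print(preference_rates([("v1", True), ("v1", False), ("v2", True)]))
# {'v1': 0.5, 'v2': 1.0}
```

Unlike the canary router, nothing ties a user to a version here, so the same person may see both models across requests.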

NOTE: You might wonder what the difference is between Canary and A/B Testing. The main differences are:

- Canary is session affinity-based: mostly the same set of users sees the updated model, whereas in A/B Testing users are randomly sent to different versions.
- Canary is specifically meant to test whether the app or model works as expected; A/B Testing is more about understanding user experience.
- The Canary user ratio should never go beyond 50%; only a small percentage of user requests (ideally less than 35%) is sent to the newer version under test.

Shadow👻

WHAT — Shadow Deployment is used in production systems to test a newer version of a model with production data. A copy of each user request is made and sent to your updated model, but the existing system gives the response.

WHERE — Say you have a high-traffic production system and want to verify how your updated model handles production data and traffic load. You deploy the updated model in shadow mode: every time a request is sent to the existing model, a copy is sent to the updated version. Only the existing model sends back a response; the updated one does not.

Shadow Deployment (Image by Author)

This type of deployment is used to understand production load, traffic, and model performance, and is primarily used in high-volume prediction services. It also increases architectural and design complexity: depending on the use case, we have to include service meshes, request routing, and more complex database designs.

In our example, we might deploy version 2 as a shadow deployment to understand how it handles production load, and also to learn where we are getting more koala classification requests 😉 or any other specific request pattern for the model.
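A minimal Python sketch of the shadow request path, assuming the two versions can be called as plain functions. `model_v1`, `model_v2`, `handle`, and the in-memory `shadow_records` list are hypothetical stand-ins (a string label stands in for the image), and a real setup would usually mirror traffic at the service-mesh or proxy layer instead. The copy is scored on a background thread so the shadow model never delays or alters the user's response.

```python
import logging
from collections import Counter
from concurrent.futures import ThreadPoolExecutor

log = logging.getLogger("shadow")


# Hypothetical stand-ins for the deployed models.
def model_v1(image: str) -> str:
    return image if image in {"cat", "dog"} else "unknown"


def model_v2(image: str) -> str:
    return image if image in {"cat", "dog", "koala"} else "unknown"


shadow_pool = ThreadPoolExecutor(max_workers=4)
shadow_records = []  # in a real system: a metrics store or database


def run_shadow(image: str, primary_answer: str) -> None:
    """Score the request copy with version 2 and record it; never raise."""
    try:
        shadow_records.append((image, primary_answer, model_v2(image)))
    except Exception:
        log.exception("shadow model failed")  # must not affect the user


def handle(image: str) -> str:
    """Serve a request: version 1 answers, version 2 only shadows."""
    primary_answer = model_v1(image)
    shadow_pool.submit(run_shadow, image, primary_answer)  # copy of the request
    return primary_answer  # only the existing model's response is returned


for image in ["cat", "koala", "dog", "koala"]:
    print(image, "->", handle(image))

shadow_pool.shutdown(wait=True)  # let pending shadow calls finish
disagreements = Counter(img for img, old, new in shadow_records if old != new)
print(disagreements)  # Counter({'koala': 2})
```

Comparing the two answers per request is how the koala pattern surfaces: every disagreement is a request that version 1 could not classify but version 2 could.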
